Dongfu Jiang (姜东甫)

I’m a second-year CS Ph.D. student in TIGER-Lab at the University of Waterloo, advised by Prof. Wenhu Chen. Previously, I received my bachelor's degree in Computer Science from Zhejiang University. I am currently interning at NVIDIA ADLR in Santa Clara.

My research focuses on LLM/VLM post-training, including alignment, evaluation, and applications. Recently, I have been particularly interested in reinforcement learning for reasoning tasks and in how models can better use tools to solve problems. I built VerlTool as an initial exploration of this direction, with a neat and easy-to-use codebase.

News

| Date | News |
|---|---|
| Aug 18, 2025 | Starting my internship at NVIDIA ADLR! |
| Jun 1, 2025 | Announcing VerlTool, see X post and GitHub. Paper coming soon. |
| May 20, 2025 | Released General-Reasoner to elicit general reasoning ability beyond math! |
| Mar 8, 2025 | 🎉 Will join NVIDIA as an intern this summer! See you in Santa Clara. |
| Feb 3, 2025 | We release 🂡 AceCoder and the SoTA reward model for coding! |

Selected publications

2025

- VideoScore2: Think before You Score in Generative Video Evaluation. Xuan He*, Dongfu Jiang*, Ping Nie, Minghao Liu, Zhengxuan Jiang, Mingyi Su, Wentao Ma, Junru Lin, and 16 more authors. In arXiv preprint, Sep 2025.

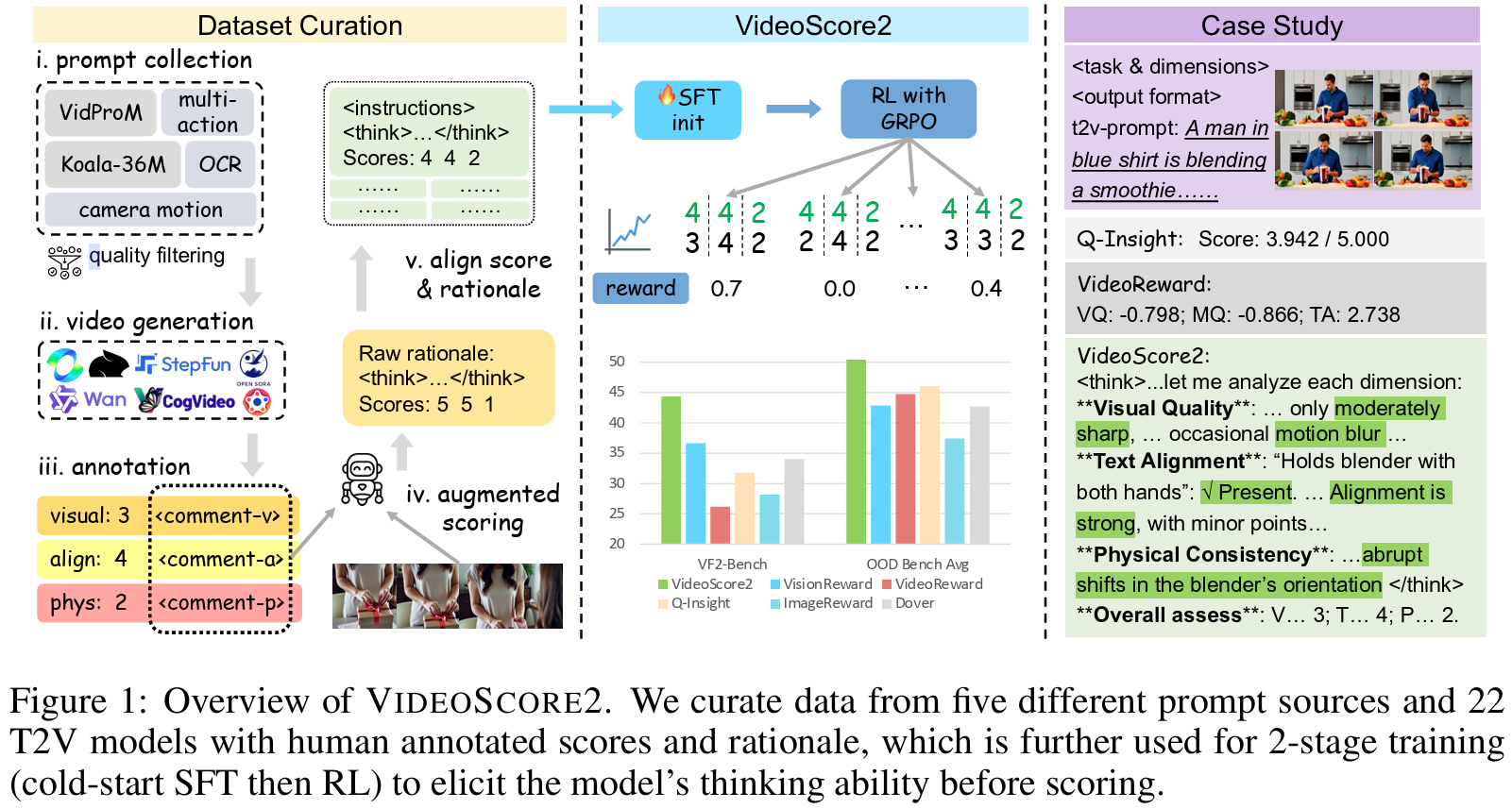

Recent advances in text-to-video generation have produced increasingly realistic and diverse content, yet evaluating such videos remains a fundamental challenge due to their multi-faceted nature encompassing visual quality, semantic alignment, and physical consistency. Existing evaluators and reward models are limited to single opaque scores, lack interpretability, or provide only coarse analysis, making them insufficient for capturing the comprehensive nature of video quality assessment. We present VideoScore2, a multi-dimensional, interpretable, and human-aligned framework that explicitly evaluates visual quality, text-to-video alignment, and physical/common-sense consistency while producing detailed chain-of-thought rationales. Our model is trained on a large-scale dataset, VideoFeedback2, containing 27,168 human-annotated videos with both scores and reasoning traces across three dimensions, using a two-stage pipeline of supervised fine-tuning followed by reinforcement learning with Group Relative Policy Optimization (GRPO) to enhance analytical robustness. Extensive experiments demonstrate that VideoScore2 achieves superior performance with 44.35 (+5.94) accuracy on our in-domain benchmark VideoScore-Bench-v2 and 50.37 (+4.32) average performance across four out-of-domain benchmarks (VideoGenReward-Bench, VideoPhy2, etc.), while providing interpretable assessments that bridge the gap between evaluation and controllable generation through effective reward modeling for Best-of-N sampling.

@inproceedings{He2025VideoScore2TB, title = {VideoScore2: Think before You Score in Generative Video Evaluation}, author = {He, Xuan and Jiang, Dongfu and Nie, Ping and Liu, Minghao and Jiang, Zhengxuan and Su, Mingyi and Ma, Wentao and Lin, Junru and Ye, Chun and Lu, Yi and Wu, Keming and Schneider, Benjamin and Do, Quy Duc and Li, Zhuofeng and Jia, Yiming and Zhang, Yuxuan and Cheng, Guo and Wang, Haozhe and Zhou, Wangchunshu and Lin, Qunshu and Zhang, Yuanxing and Zhang, Ge and Huang, Wenhao and Chen, Wenhu}, year = {2025}, month = sep, booktitle = {arxiv preprint}, github = {TIGER-AI-Lab/VideoScore2}, huggingface = {https://huggingface.co/collections/TIGER-Lab/videoscore2-68dbe2618ceec197d39fe19d}, twitter = {https://x.com/WenhuChen/status/1973546309106720908}, selected = true, num_co_first_author = {2}, }
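
The GRPO stage mentioned in the VideoScore2 abstract relies on comparing several sampled responses to the same prompt against each other. A minimal sketch of that group-relative normalization is shown below; the function name, the use of the population standard deviation, and the `eps` value are assumptions for illustration, not details taken from the paper.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize the rewards of one sampled group to zero mean and unit scale.

    `rewards` holds one scalar reward per response sampled for the same prompt.
    The population standard deviation and `eps` are illustrative assumptions.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four hypothetical responses to one prompt, two rewarded and two not.
advantages = group_relative_advantages([1.0, 0.0, 1.0, 0.0])
print(advantages)
```

Responses that score above the group mean receive a positive advantage and the rest a negative one, so no separate value network is needed to provide a baseline.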

- VerlTool: Towards Holistic Agentic Reinforcement Learning with Tool Use. Dongfu Jiang*, Yi Lu*, Zhuofeng Li*, Zhiheng Lyu*, Ping Nie, Haozhe Wang, Alex Su, Hui Chen, and 4 more authors. In arXiv preprint, Feb 2025.

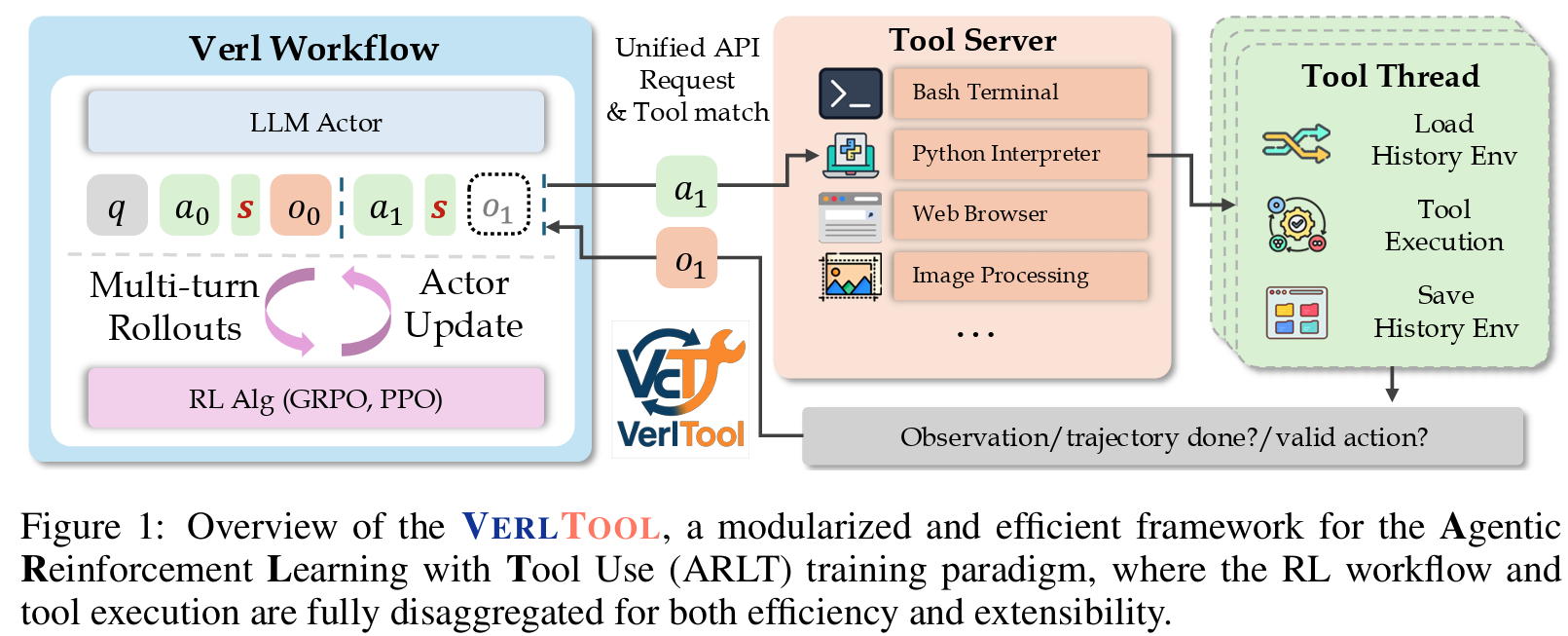

Reinforcement Learning with Verifiable Rewards (RLVR) has demonstrated success in enhancing LLM reasoning capabilities, but remains limited to single-turn interactions without tool integration. While recent Agentic Reinforcement Learning with Tool use (ARLT) approaches have emerged to address multi-turn tool interactions, existing works develop task-specific codebases that suffer from fragmentation, synchronous execution bottlenecks, and limited extensibility across domains. These inefficiencies hinder broader community adoption and algorithmic innovation. We introduce VerlTool, a unified and modular framework that addresses these limitations through systematic design principles. VerlTool provides four key contributions: (1) upstream alignment with VeRL ensuring compatibility and simplified maintenance, (2) unified tool management via standardized APIs supporting diverse modalities including code execution, search, SQL databases, and vision processing, (3) asynchronous rollout execution achieving a nearly 2x speedup by eliminating synchronization bottlenecks, and (4) comprehensive evaluation demonstrating competitive performance across 6 ARLT domains. Our framework formalizes ARLT as multi-turn trajectories with multi-modal observation tokens (text/image/video), extending beyond single-turn RLVR paradigms. We train and evaluate models on mathematical reasoning, knowledge QA, SQL generation, visual reasoning, web search, and software engineering tasks, achieving results comparable to specialized systems while providing unified training infrastructure. The modular plugin architecture enables rapid tool integration requiring only lightweight Python definitions, significantly reducing development overhead and providing a scalable foundation for tool-augmented RL research. Our code is open-sourced on GitHub at TIGER-AI-Lab/verltool.

@inproceedings{Jiang2025VerlToolTH, title = {VerlTool: Towards Holistic Agentic Reinforcement Learning with Tool Use}, author = {Jiang, Dongfu and Lu, Yi and Li, Zhuofeng and Lyu, Zhiheng and Nie, Ping and Wang, Haozhe and Su, Alex and Chen, Hui and Zou, Kai and Du, Chao and Pang, Tianyu and Chen, Wenhu}, year = {2025}, month = feb, booktitle = {arxiv preprint}, github = {TIGER-AI-Lab/verltool}, twitter = {https://x.com/DongfuJiang/status/1963160374209134608}, selected = true, num_co_first_author = {4}, }
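
To illustrate the asynchronous rollout idea from the VerlTool abstract, here is a small `asyncio` sketch in which each trajectory awaits its own tool calls instead of waiting for the whole batch at every turn. It is not VerlTool's actual API: `call_tool`, `rollout`, and `run_rollouts` are hypothetical names, and the tool latency is simulated with a sleep.

```python
import asyncio


async def call_tool(action: str) -> str:
    """Hypothetical stand-in for a tool-server request; latency is simulated."""
    await asyncio.sleep(0.001 * len(action))
    return f"observation for {action!r}"


async def rollout(prompt: str, max_turns: int = 2) -> list[str]:
    """Run one multi-turn trajectory: alternate actions and tool observations."""
    trajectory = [prompt]
    for turn in range(max_turns):
        # Placeholder for an action that a policy model would generate.
        action = f"{prompt}-action{turn}"
        observation = await call_tool(action)
        trajectory += [action, observation]
    return trajectory


async def run_rollouts(prompts):
    """Advance all trajectories concurrently; a slow tool call blocks only its own rollout."""
    return await asyncio.gather(*(rollout(p) for p in prompts))


trajectories = asyncio.run(run_rollouts(["math", "sql", "search"]))
for t in trajectories:
    print(t)
```

In a synchronous loop, every turn would wait for the slowest tool call in the batch; here each trajectory proceeds as soon as its own observation arrives.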

- ACECODER: Acing Coder RL via Automated Test-Case Synthesis. Huaye Zeng*, Dongfu Jiang*, Haozhe Wang, Ping Nie, Xiaotong Chen, and Wenhu Chen. In arXiv preprint, Feb 2025.

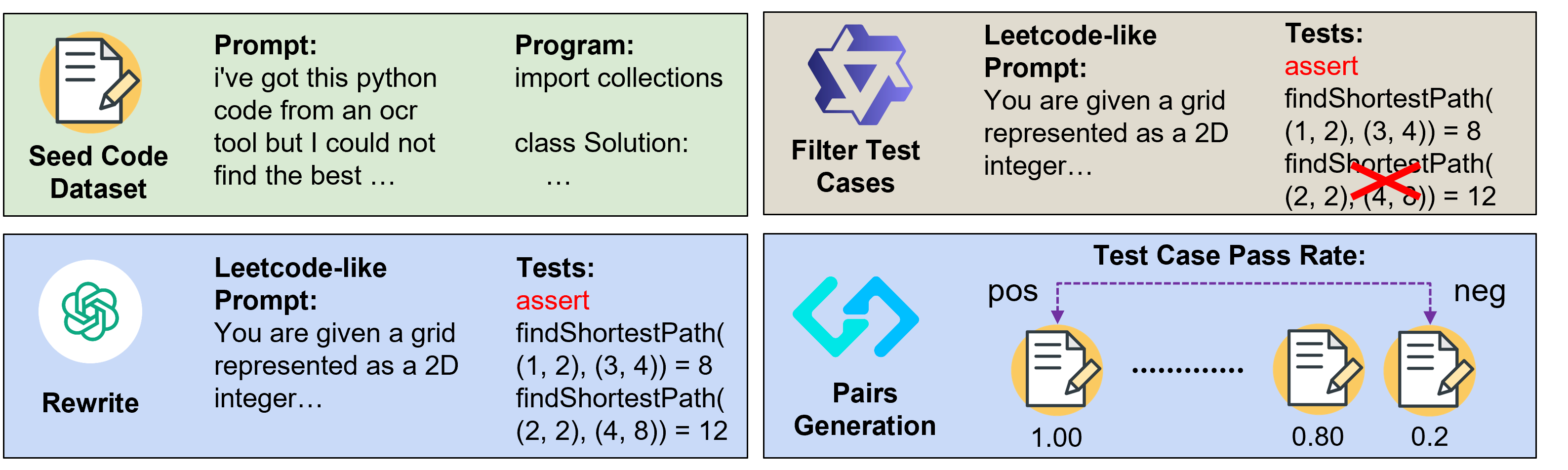

Most progress in recent coder models has been driven by supervised fine-tuning (SFT), while the potential of reinforcement learning (RL) remains largely unexplored, primarily due to the lack of reliable reward data and reward models in the code domain. In this paper, we address this challenge by leveraging automated large-scale test-case synthesis to enhance code model training. Specifically, we design a pipeline that generates extensive (question, test-cases) pairs from existing code data. Using these test cases, we construct preference pairs based on pass rates over sampled programs to train reward models with Bradley-Terry loss. The resulting reward models yield an average 10-point improvement for Llama-3.1-8B-Ins and a 5-point improvement for Qwen2.5-Coder-7B-Ins through best-of-32 sampling, making the 7B model on par with the 236B DeepSeek-V2.5. Furthermore, we conduct reinforcement learning with both reward models and test-case pass rewards, leading to consistent improvements across HumanEval, MBPP, BigCodeBench, and LiveCodeBench (V4). Notably, we follow R1-style training to start from Qwen2.5-Coder-base directly and show that our RL training can improve the model on HumanEval-plus by over 25% and on MBPP-plus by 6% in merely 80 optimization steps. We believe our results highlight the huge potential of reinforcement learning in coder models.

@inproceedings{Zeng2025ACECODERAC, title = {ACECODER: Acing Coder RL via Automated Test-Case Synthesis}, author = {Zeng, Huaye and Jiang, Dongfu and Wang, Haozhe and Nie, Ping and Chen, Xiaotong and Chen, Wenhu}, booktitle = {arxiv preprint}, month = feb, year = {2025}, github = {TIGER-AI-Lab/AceCoder}, huggingface = {https://huggingface.co/collections/TIGER-Lab/acecoder-67a16011a6c7d65cad529eba}, twitter = {https://x.com/DongfuJiang/status/1886828310841204859}, selected = true, num_co_first_author = {2}, }
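
The AceCoder abstract describes building preference pairs from test-case pass rates and training a reward model with a Bradley-Terry loss. The sketch below shows one way those two steps could look; the thresholds `min_gap` and `min_chosen` and both function names are assumptions chosen for illustration, not values from the paper.

```python
import math
from itertools import combinations


def build_preference_pairs(pass_rates, min_gap=0.4, min_chosen=0.8):
    """Return (chosen_idx, rejected_idx) pairs for programs sampled on one question.

    A pair is kept only if the better program passes enough tests and the
    pass-rate gap is large enough. Both thresholds are hypothetical.
    """
    pairs = []
    for i, j in combinations(range(len(pass_rates)), 2):
        hi, lo = (i, j) if pass_rates[i] >= pass_rates[j] else (j, i)
        if pass_rates[hi] >= min_chosen and pass_rates[hi] - pass_rates[lo] >= min_gap:
            pairs.append((hi, lo))
    return pairs


def bradley_terry_loss(reward_chosen, reward_rejected):
    """Negative log-likelihood -log(sigmoid(r_chosen - r_rejected)), computed stably."""
    margin = reward_chosen - reward_rejected
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Pass rates of four hypothetical sampled programs for one question.
pairs = build_preference_pairs([1.0, 0.5, 0.0, 0.75])
print(pairs)
print(bradley_terry_loss(2.0, 0.0), bradley_terry_loss(0.0, 2.0))
```

The loss shrinks as the reward model scores the chosen program further above the rejected one, which is what pushes the model to rank higher-pass-rate programs first.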

2024

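- VideoScore: Building Automatic Metrics to Simulate Fine-grained Human Feedback for Video Generation. Xuan He*, Dongfu Jiang*, Ge Zhang, Max Ku, Achint Soni, Sherman Siu, Haonan Chen, Abhranil Chandra, and 11 more authors. In Proceedings of EMNLP, Nov 2024.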

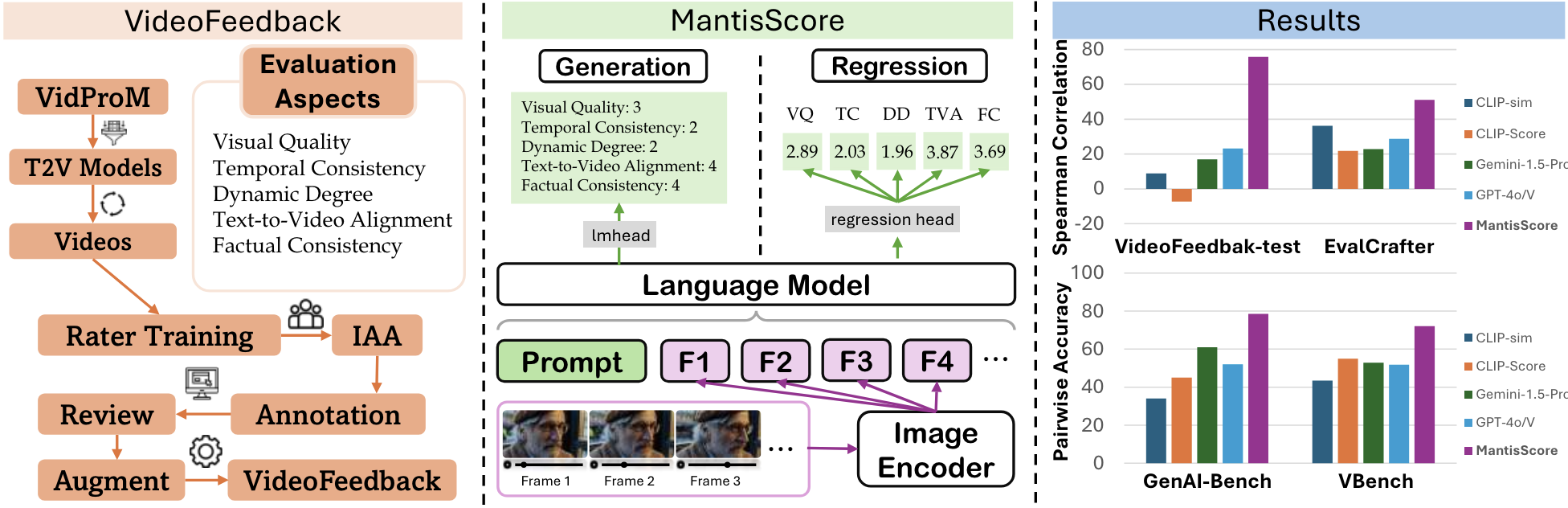

Recent years have witnessed great advances in video generation. However, the development of automatic video metrics is lagging significantly behind. None of the existing metrics is able to provide reliable scores over generated videos. The main barrier is the lack of a large-scale human-annotated dataset. In this paper, we release VideoFeedback, the first large-scale dataset containing human-provided multi-aspect scores over 37.6K synthesized videos from 11 existing video generative models. We train VideoScore (initialized from Mantis) based on VideoFeedback to enable automatic video quality assessment. Experiments show that the Spearman correlation between VideoScore and humans can reach 77.1 on VideoFeedback-test, beating the prior best metrics by about 50 points. Further results on the held-out EvalCrafter, GenAI-Bench, and VBench show that VideoScore has a consistently much higher correlation with human judges than other metrics. Given these results, we believe VideoScore can serve as a great proxy for human raters to (1) rate different video models to track progress and (2) simulate fine-grained human feedback in Reinforcement Learning with Human Feedback (RLHF) to improve current video generation models.

@inproceedings{he2024videoscore, title = {VideoScore: Building Automatic Metrics to Simulate Fine-grained Human Feedback for Video Generation}, author = {He, Xuan and Jiang, Dongfu and Zhang, Ge and Ku, Max and Soni, Achint and Siu, Sherman and Chen, Haonan and Chandra, Abhranil and Jiang, Ziyan and Arulraj, Aaran and Wang, Kai and Do, Quy Duc and Ni, Yuansheng and Lyu, Bohan and Narsupalli, Yaswanth and Fan, Rongqi and Lyu, Zhiheng and Lin, Bill Yuchen and Chen, Wenhu}, booktitle = {Proceedings of EMNLP}, month = nov, year = {2024}, address = {Miami, US}, publisher = {Association for Computational Linguistics}, url = {https://openreview.net/forum?id=PcUvvKzULn}, github = {TIGER-AI-Lab/VideoScore}, huggingface = {https://huggingface.co/collections/TIGER-Lab/videoscore-6678c9451192e834e91cc0bf}, twitter = {https://twitter.com/DongfuJiang/status/1805438506137010326}, selected = true, num_co_first_author = {2}, }
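
The VideoScore abstract reports agreement with human raters as a Spearman correlation. As a reminder of what that number measures, here is a small pure-Python sketch that ranks both score lists (averaging ranks over ties) and takes the Pearson correlation of the ranks. The example scores are made up, and the abstract's 77.1 appears to be this coefficient on a 0 to 100 scale.

```python
def average_ranks(values):
    """Assign 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical metric scores and human ratings for four videos.
metric_scores = [0.2, 0.5, 0.7, 0.9]
human_ratings = [1, 2, 2, 4]
print(spearman(metric_scores, human_ratings))
```

Because only ranks matter, a metric is rewarded for ordering videos the way humans do, regardless of the scale of its raw scores.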

- WildVision: Evaluating Vision-Language Models in the Wild with Human Preferences. Yujie Lu, Dongfu Jiang, Wenhu Chen, William Yang Wang, Yejin Choi, and Bill Yuchen Lin. In Proceedings of NeurIPS 2024 Datasets and Benchmarks Track, Dec 2024.

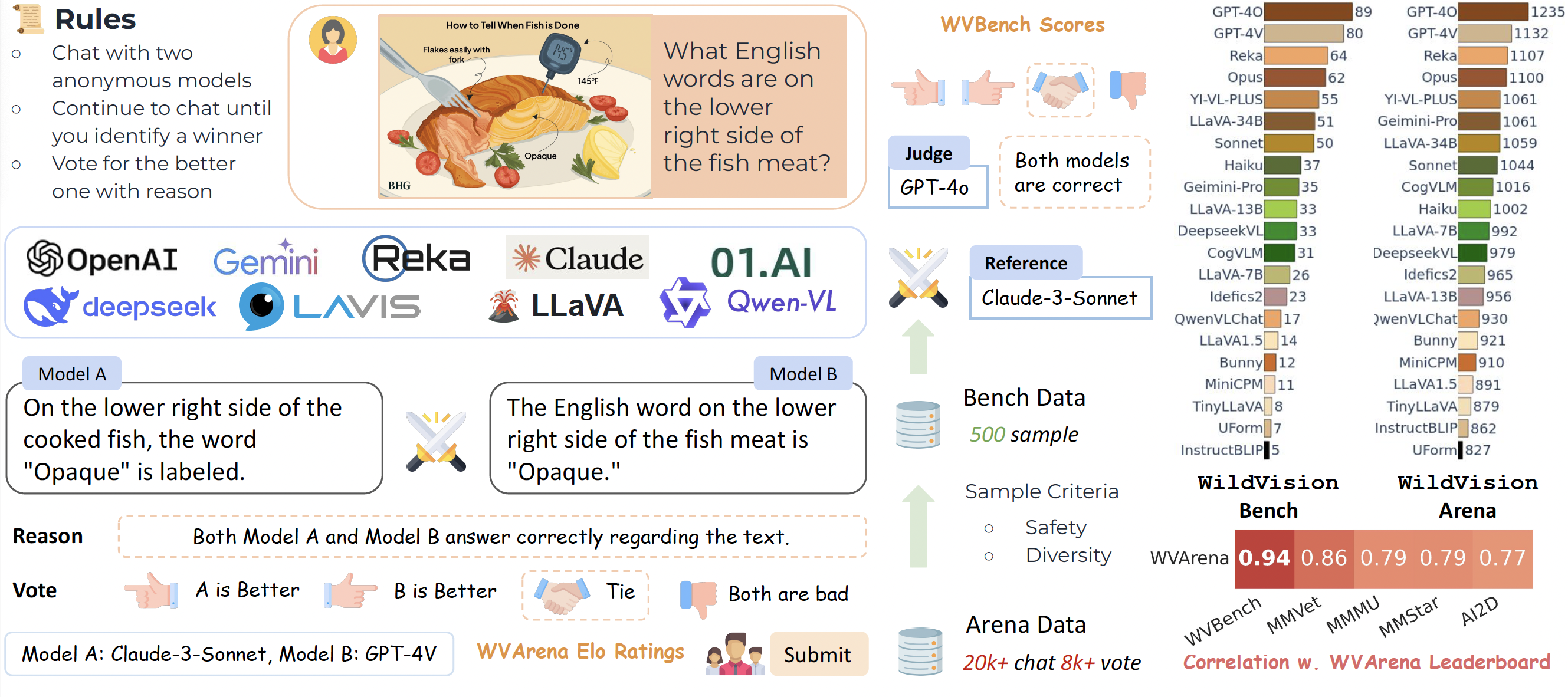

Recent breakthroughs in vision-language models (VLMs) emphasize the necessity of benchmarking human preferences in real-world multimodal interactions. To address this gap, we launched WildVision-Arena (WV-Arena), an online platform that collects human preferences to evaluate VLMs. We curated WV-Bench by selecting 500 high-quality samples from 8,000 user submissions in WV-Arena. WV-Bench uses GPT-4 as the judge to compare each VLM with Claude-3-Sonnet, achieving a Spearman correlation of 0.94 with the WV-Arena Elo. This significantly outperforms other benchmarks like MMVet, MMMU, and MMStar. Our comprehensive analysis of 20K real-world interactions reveals important insights into the failure cases of top-performing VLMs. For example, we find that although GPT-4V surpasses many other models like Reka-Flash, Opus, and Yi-VL-Plus in simple visual recognition and reasoning tasks, it still faces challenges with subtle contextual cues, spatial reasoning, visual imagination, and expert domain knowledge. Additionally, current VLMs exhibit issues with hallucinations and safety when intentionally provoked. We are releasing our chat and feedback data to further advance research in the field of VLMs.

@inproceedings{Lu2024WildVisionEV, title = {WildVision: Evaluating Vision-Language Models in the Wild with Human Preferences}, author = {Lu, Yujie and Jiang, Dongfu and Chen, Wenhu and Wang, William Yang and Choi, Yejin and Lin, Bill Yuchen}, booktitle = {Proceedings of NeurIPS 2024 Datasets and Benchmarks Track}, address = {Vancouver, Canada}, month = dec, year = {2024}, url = {https://openreview.net/forum?id=i92eyFCQHC#discussion}, github = {WildVision-AI/WildVision-Arena}, twitter = {https://twitter.com/billyuchenlin/status/1755207605537120513}, huggingface = {https://huggingface.co/spaces/WildVision/vision-arena}, selected = true, }
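
The WildVision abstract compares WV-Bench against the WV-Arena Elo. For readers unfamiliar with Elo ratings, the sketch below applies the textbook online Elo update to pairwise votes. The K-factor, the initial rating, and the model names are assumptions, and the arena may well compute its ratings differently.

```python
def expected_score(r_a, r_b):
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def update_elo(ratings, model_a, model_b, outcome, k=32.0, initial=1000.0):
    """Update ratings in place after one vote.

    `outcome` is 1.0 if model_a wins, 0.0 if model_b wins, and 0.5 for a tie.
    `k` and `initial` are illustrative defaults.
    """
    r_a = ratings.get(model_a, initial)
    r_b = ratings.get(model_b, initial)
    e_a = expected_score(r_a, r_b)
    ratings[model_a] = r_a + k * (outcome - e_a)
    ratings[model_b] = r_b + k * ((1.0 - outcome) - (1.0 - e_a))
    return ratings


# Three hypothetical votes between placeholder models.
ratings = {}
update_elo(ratings, "model-x", "model-y", 1.0)
update_elo(ratings, "model-x", "model-z", 0.5)
update_elo(ratings, "model-y", "model-z", 0.0)
print(sorted(ratings.items(), key=lambda kv: -kv[1]))
```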

- GenAI Arena: An Open Evaluation Platform for Generative Models. Dongfu Jiang*, Max Ku*, Tianle Li*, Yuansheng Ni, Shizhuo Sun, Rongqi Fan, and Wenhu Chen. In Proceedings of NeurIPS 2024 Datasets and Benchmarks Track, Dec 2024.

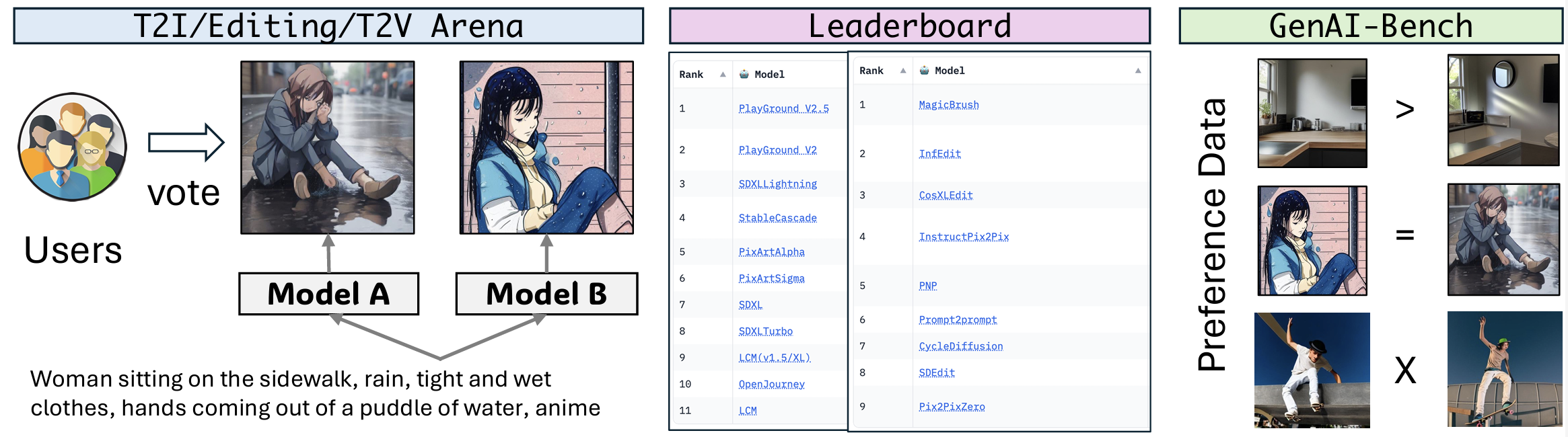

Generative AI has made remarkable strides to revolutionize fields such as image and video generation. These advancements are driven by innovative algorithms, architecture, and data. However, the rapid proliferation of generative models has highlighted a critical gap: the absence of trustworthy evaluation metrics. Current automatic assessments such as FID, CLIP, FVD, etc. often fail to capture the nuanced quality and user satisfaction associated with generative outputs. This paper proposes an open platform, GenAI-Arena, to evaluate different image and video generative models, where users can actively participate in evaluating these models. By leveraging collective user feedback and votes, GenAI-Arena aims to provide a more democratic and accurate measure of model performance. It covers three arenas for text-to-image generation, text-to-video generation, and image editing, respectively. Currently, we cover a total of 27 open-source generative models. GenAI-Arena has been operating for four months, amassing over 6000 votes from the community. We describe our platform, analyze the data, and explain the statistical methods for ranking the models. To further promote research in building model-based evaluation metrics, we release a cleaned version of our preference data for the three tasks, namely GenAI-Bench. We prompt existing multi-modal models like Gemini and GPT-4o to mimic human voting. We compute the correlation between model voting and human voting to understand their judging abilities. Our results show that existing multimodal models are still lagging in assessing generated visual content: even the best model, GPT-4o, only achieves a Pearson correlation of 0.22 in the quality subscore and behaves like random guessing in the others.

@inproceedings{Jiang2024GenAIAA, title = {GenAI Arena: An Open Evaluation Platform for Generative Models}, author = {Jiang, Dongfu and Ku, Max and Li, Tianle and Ni, Yuansheng and Sun, Shizhuo and Fan, Rongqi and Chen, Wenhu}, booktitle = {Proceedings of NeurIPS 2024 Datasets and Benchmarks Track}, address = {Vancouver, Canada}, month = dec, year = {2024}, url = {https://openreview.net/forum?id=0Gmi8TkUC7#discussion}, github = {TIGER-AI-Lab/GenAI-Arena}, huggingface = {https://huggingface.co/spaces/TIGER-Lab/GenAI-Arena}, selected = true, num_co_first_author = {3}, }

- MANTIS: Interleaved Multi-Image Instruction Tuning. Dongfu Jiang, Xuan He, Huaye Zeng, Cong Wei, Max W.F. Ku, Qian Liu, and Wenhu Chen. Transactions on Machine Learning Research, Dec 2024.

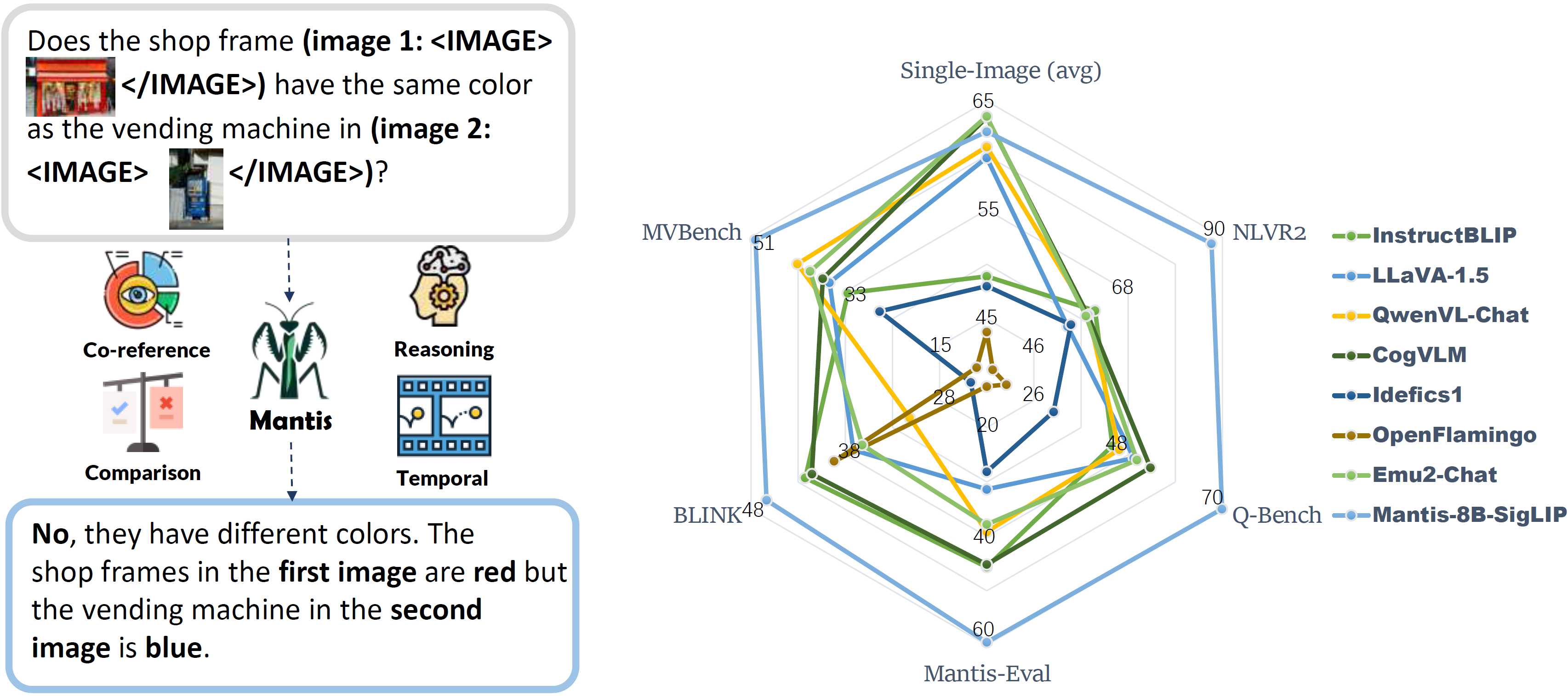

Large multimodal models (LMMs) have shown great results in single-image vision language tasks. However, their ability to solve multi-image visual language tasks is yet to be improved. Existing LMMs like OpenFlamingo, Emu2, and Idefics gain their multi-image ability through pre-training on hundreds of millions of noisy interleaved image-text data from the web, which is neither efficient nor effective. In this paper, we aim to build strong multi-image LMMs via instruction tuning with academic-level resources. Therefore, we meticulously construct Mantis-Instruct, containing 721K multi-image instruction data, to train a family of models, Mantis. The instruction tuning empowers Mantis with different multi-image skills like co-reference, comparison, reasoning, and temporal understanding. We evaluate Mantis on five multi-image benchmarks and seven single-image benchmarks. Mantis-SigLIP can achieve SoTA results on all the multi-image benchmarks and beat the strongest multi-image baseline, Idefics2-8B, by an average of 11 absolute points. Notably, Idefics2-8B was pre-trained on 140M interleaved multi-image data, which is 200x larger than Mantis-Instruct. We observe that Mantis performs equivalently well on the held-in and held-out benchmarks, which shows its generalization ability. Notably, we found that Mantis can even match the performance of GPT-4V on multi-image benchmarks. We further evaluate Mantis on single-image benchmarks and demonstrate that Mantis also maintains a strong single-image performance on par with CogVLM and Emu2. Our results show that multi-image abilities are not necessarily gained through massive pre-training; instead, they can be gained through low-cost instruction tuning. Our work provides new perspectives on how to improve LMMs’ multi-image abilities.

@article{Jiang2024MANTISIM, title = {MANTIS: Interleaved Multi-Image Instruction Tuning}, author = {Jiang, Dongfu and He, Xuan and Zeng, Huaye and Wei, Cong and Ku, Max W.F. and Liu, Qian and Chen, Wenhu}, journal = {Transactions on Machine Learning Research}, year = {2024}, eprint = {2405.01483}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, url = {https://arxiv.org/abs/2405.01483}, github = {TIGER-AI-Lab/Mantis}, twitter = {https://twitter.com/DongfuJiang/status/1786552974598078677}, huggingface = {https://huggingface.co/collections/TIGER-Lab/mantis-6619b0834594c878cdb1d6e4}, selected = true, }

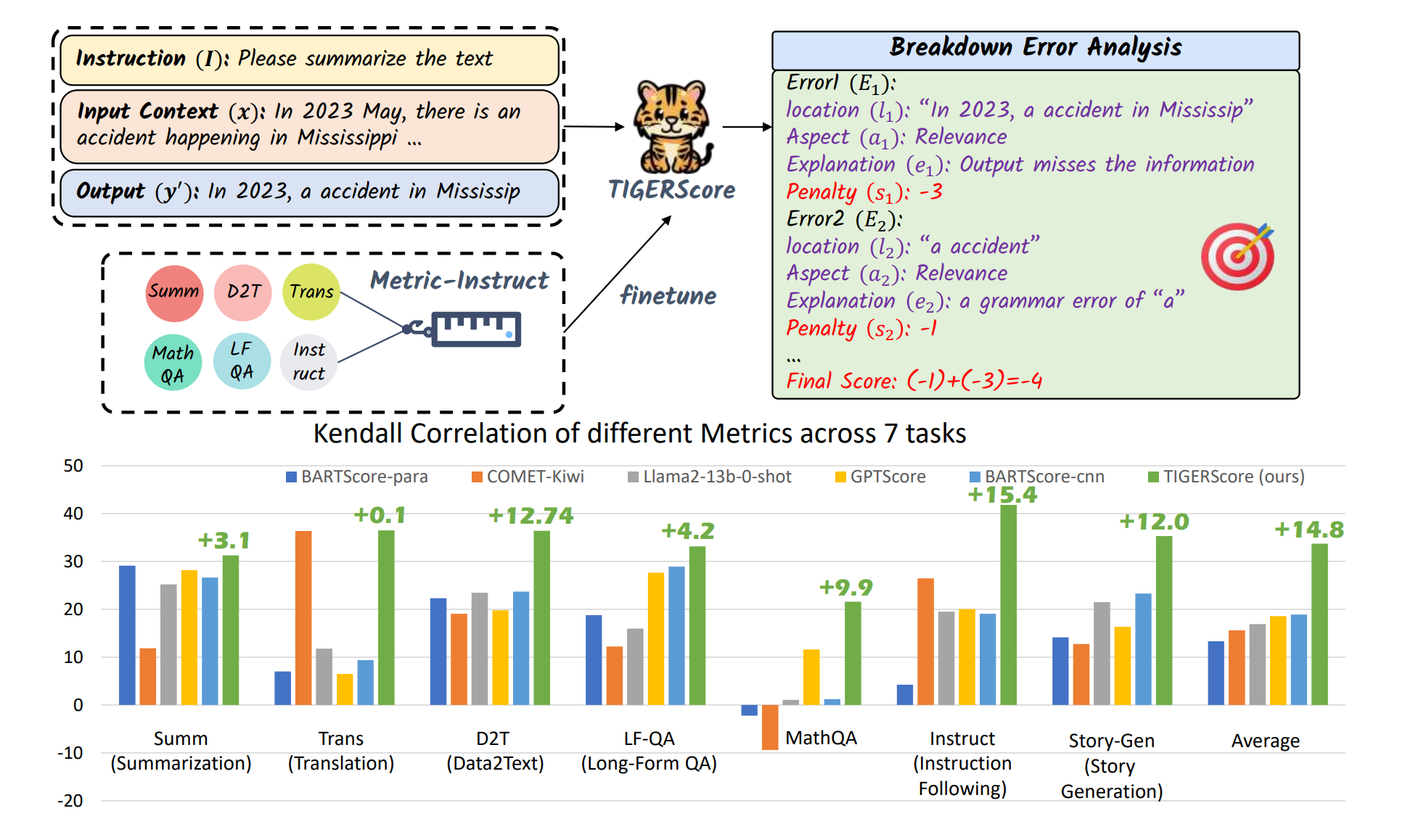

- TIGERScore: Towards Building Explainable Metric for All Text Generation Tasks. Dongfu Jiang*, Yishan Li*, Ge Zhang, Wenhao Huang, Bill Yuchen Lin, and Wenhu Chen. Transactions on Machine Learning Research (TMLR), May 2024.

We present TIGERScore, a Trained metric that follows Instruction Guidance to perform Explainable and Reference-free evaluation over a wide spectrum of text generation tasks. Different from other automatic evaluation methods that only provide arcane scores, TIGERScore is guided by natural language instruction to provide error analysis to pinpoint the mistakes in the generated text. Our metric is based on LLaMA-2, trained on our meticulously curated instruction-tuning dataset MetricInstruct, which covers 6 text generation tasks and 23 text generation datasets. The dataset consists of 42K quadruples in the form of (instruction, input, system output, error analysis). We collected the ‘system outputs’ from a large variety of models to cover different types of errors. To quantitatively assess our metric, we evaluate its correlation with human ratings on 5 held-in datasets and 2 held-out datasets, and show that TIGERScore can achieve the open-source SoTA correlation with human ratings across these datasets and almost approaches the GPT-4 evaluator. As a reference-free metric, its correlation can even surpass the best existing reference-based metrics. To further qualitatively assess the rationale generated by our metric, we conduct human evaluation on the generated explanations and find that the explanations are 70.8% accurate. Through these experimental results, we believe TIGERScore demonstrates the possibility of building universal explainable metrics to evaluate any text generation task.

@article{jiang2024tigerscore, title = {{TIGERS}core: Towards Building Explainable Metric for All Text Generation Tasks}, author = {Jiang, Dongfu and Li, Yishan and Zhang, Ge and Huang, Wenhao and Lin, Bill Yuchen and Chen, Wenhu}, journal = {Transactions on Machine Learning Research (TMLR)}, year = {2024}, month = may, selected = true, github = {TIGER-AI-Lab/TIGERScore}, twitter = {https://twitter.com/DongfuJiang/status/1735508082510168425}, huggingface = {https://huggingface.co/collections/TIGER-Lab/tigerscore-657020bfae61260b6131f1ca}, issn = {2835-8856}, url = {https://openreview.net/forum?id=EE1CBKC0SZ}, num_co_first_author = {2} }

2023

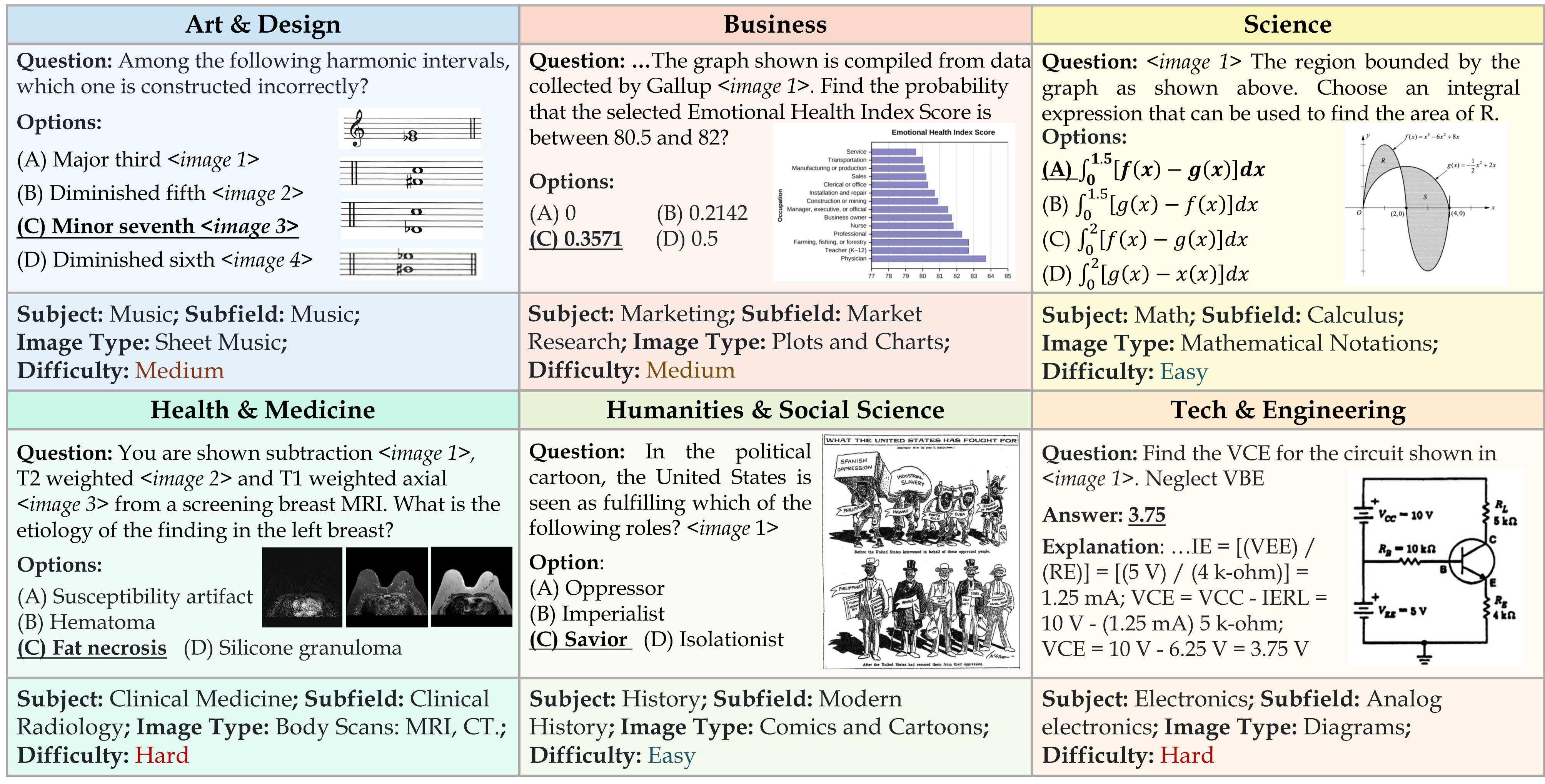

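- MMMU: A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI. Xiang Yue*, Yuansheng Ni*, Kai Zhang*, Tianyu Zheng*, Ruoqi Liu, Ge Zhang, Samuel Stevens, Dongfu Jiang, and 14 more authors. In Proceedings of CVPR (oral), Jun 2023.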

We introduce MMMU: a new benchmark designed to evaluate multimodal models on massive multi-discipline tasks demanding college-level subject knowledge and deliberate reasoning. MMMU includes 11.5K meticulously collected multimodal questions from college exams, quizzes, and textbooks, covering six core disciplines: Art & Design, Business, Science, Health & Medicine, Humanities & Social Science, and Tech & Engineering. These questions span 30 subjects and 183 subfields, comprising 30 highly heterogeneous image types, such as charts, diagrams, maps, tables, music sheets, and chemical structures. Unlike existing benchmarks, MMMU focuses on advanced perception and reasoning with domain-specific knowledge, challenging models to perform tasks akin to those faced by experts. The evaluation of 28 open-source LMMs as well as the proprietary GPT-4V(ision) and Gemini highlights the substantial challenges posed by MMMU. Even the advanced GPT-4V and Gemini Ultra only achieve accuracies of 56% and 59%, respectively, indicating significant room for improvement. We believe MMMU will stimulate the community to build next-generation multimodal foundation models towards expert artificial general intelligence.

@inproceedings{Yue2023MMMUAM, title = {MMMU: A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI}, author = {Yue, Xiang and Ni, Yuansheng and Zhang, Kai and Zheng, Tianyu and Liu, Ruoqi and Zhang, Ge and Stevens, Samuel and Jiang, Dongfu and Ren, Weiming and Sun, Yuxuan and Wei, Cong and Yu, Botao and Yuan, Ruibin and Sun, Renliang and Yin, Ming and Zheng, Boyuan and Yang, Zhenzhu and Liu, Yibo and Huang, Wenhao and Sun, Huan and Su, Yu and Chen, Wenhu}, month = jun, address = {Seattle, US}, year = {2023}, booktitle = {Proceedings of CVPR (oral)}, url = {https://arxiv.org/abs/2311.16502}, github = {MMMU-Benchmark/MMMU}, twitter = {https://twitter.com/xiangyue96/status/1729698316554801358}, huggingface = {https://huggingface.co/datasets/MMMU/MMMU}, selected = true, num_co_first_author = {4} }

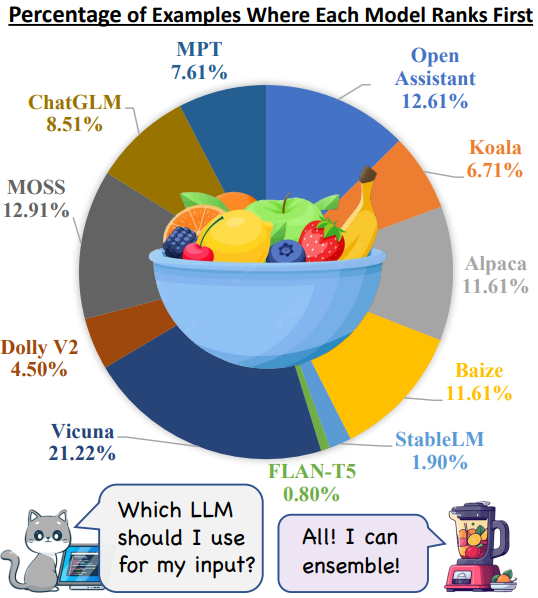

- LLM-Blender: Ensembling Large Language Models with Pairwise Ranking and Generative Fusion. Dongfu Jiang, Xiang Ren, and Bill Yuchen Lin. In Proceedings of ACL, Jul 2023.

We present LLM-Blender, an ensembling framework designed to attain consistently superior performance by leveraging the diverse strengths of multiple open-source large language models (LLMs). Our framework consists of two modules, PairRanker and GenFuser, addressing the observation that optimal LLMs for different examples can significantly vary. PairRanker employs a specialized pairwise comparison method to distinguish subtle differences between candidate outputs. It jointly encodes the input text and a pair of candidates, using cross-attention encoders to determine the superior one. Our results demonstrate that PairRanker exhibits the highest correlation with ChatGPT-based ranking. Then, GenFuser aims to merge the top-ranked candidates, generating an improved output by capitalizing on their strengths and mitigating their weaknesses. To facilitate large-scale evaluation, we introduce a benchmark dataset, MixInstruct, which is a mixture of multiple instruction datasets featuring oracle pairwise comparisons. Our LLM-Blender significantly outperforms individual LLMs and baseline methods across various metrics, establishing a substantial performance gap.

@inproceedings{jiang-etal-2023-llm, title = {{LLM}-Blender: Ensembling Large Language Models with Pairwise Ranking and Generative Fusion}, author = {Jiang, Dongfu and Ren, Xiang and Lin, Bill Yuchen}, booktitle = {Proceedings of ACL}, month = jul, year = {2023}, address = {Toronto, Canada}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2023.acl-long.792}, doi = {10.18653/v1/2023.acl-long.792}, pages = {14165--14178}, selected = true, github = {yuchenlin/LLM-Blender}, twitter = {https://twitter.com/billyuchenlin/status/1668666357058277377}, huggingface = {https://huggingface.co/llm-blender}, }
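
The LLM-Blender abstract describes ranking candidate outputs through pairwise comparisons. One simple way to turn pairwise judgments into a full ranking is to count wins per candidate, as sketched below. The win-counting aggregation and the toy `toy_prefer` comparator are assumptions for illustration; in the paper the comparison comes from the trained PairRanker model, and the abstract does not specify how its judgments are aggregated.

```python
from itertools import combinations


def rank_by_pairwise_wins(candidates, prefer):
    """Rank candidates by how many pairwise comparisons each one wins.

    `prefer(a, b)` returns True when candidate `a` is judged better than `b`.
    """
    wins = [0] * len(candidates)
    for i, j in combinations(range(len(candidates)), 2):
        if prefer(candidates[i], candidates[j]):
            wins[i] += 1
        else:
            wins[j] += 1
    # sorted() is stable, so ties keep their original order.
    order = sorted(range(len(candidates)), key=lambda i: -wins[i])
    return [candidates[i] for i in order]


def toy_prefer(a, b):
    """Hypothetical comparator: prefer the output with more distinct words."""
    return len(set(a.split())) >= len(set(b.split()))


candidates = ["a a a", "a b c d", "a b"]
ranked = rank_by_pairwise_wins(candidates, toy_prefer)
print(ranked)
```

The top-ranked candidates from such a ranking would then be the inputs to a fusion step like GenFuser.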